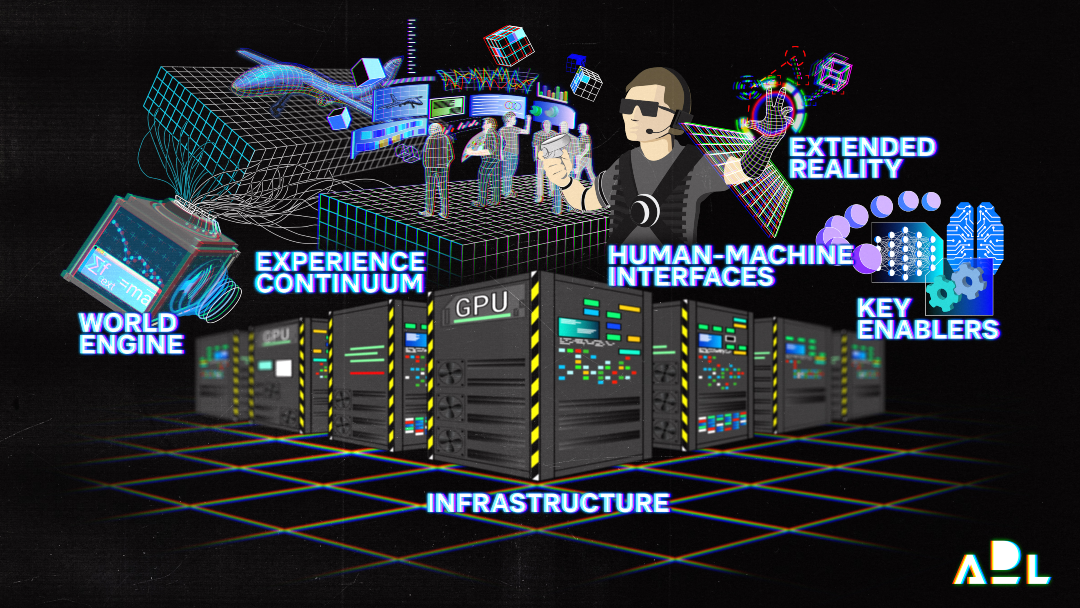

(Metaverse Layers – illustrated by Samuel Babinet for ADL)

Some of the technologies behind the Metaverse, as we defined it in our previous article, are not particularly new. Yet since the Facebook (Meta) announcements in September 2021, many people have been left with an uneasy feeling: is the Metaverse genuinely something new, or is it simply a repackaging of technologies that have been under development for several decades?

What is the situation exactly? What are the building blocks that make up the Metaverse? Which technologies does each of these building blocks rely on? How mature are they, and when will they reach maturity? When can we hope (or fear) to see the "real" advent of the Metaverse?

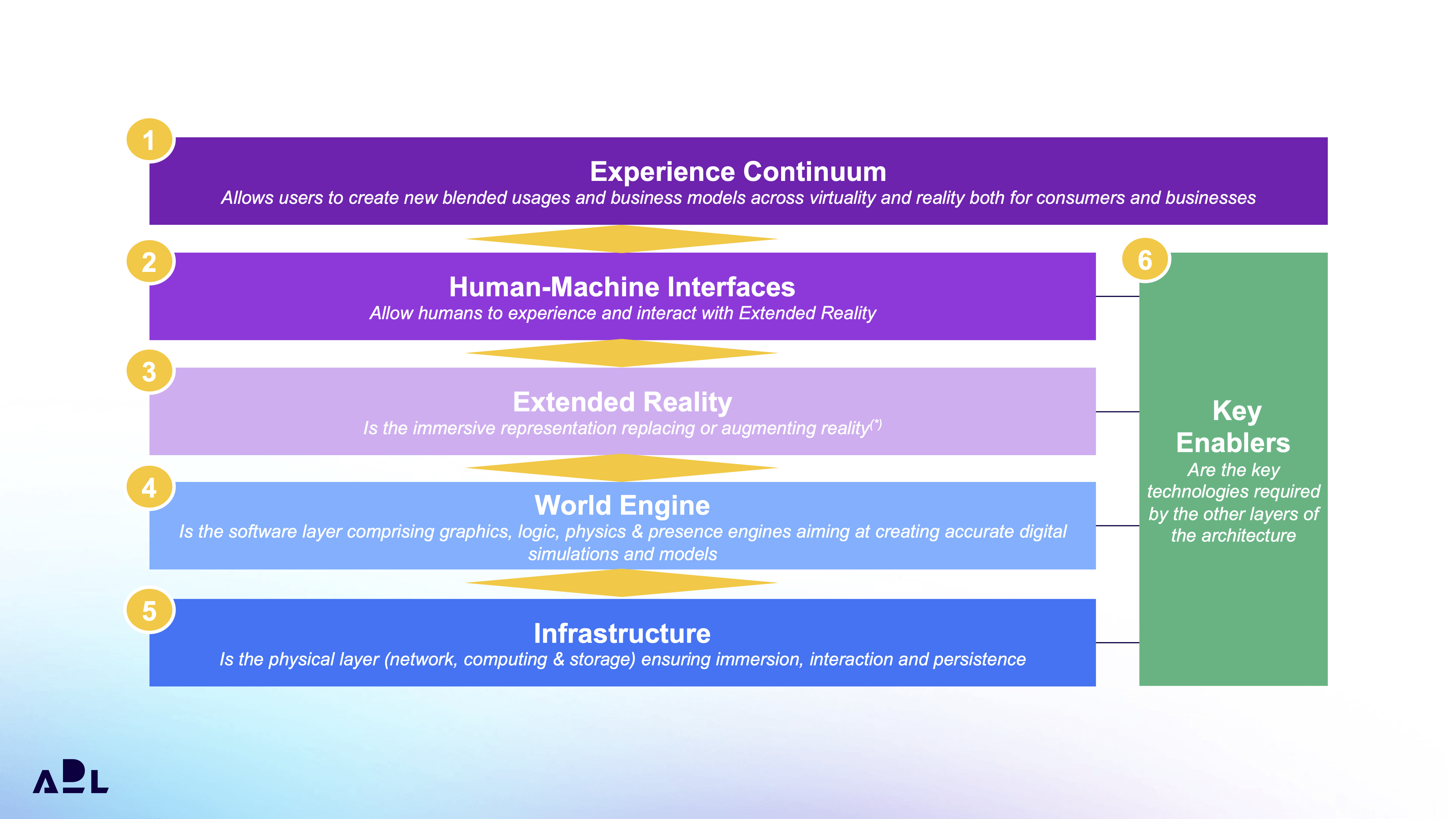

To begin answering these questions, this article introduces a framework we developed at Arthur D. Little. This framework aims to represent the architecture of the Metaverse in six layers. Six layers that cover the “value chain” of the Metaverse. Six layers whose top-level corresponds to new user experiences and business models and whose lowest level corresponds to the required hardware and software infrastructure.

Figure 1 – the six architectural layers of the Metaverse, according to Arthur D. Little

Layer #1: Experience Continuum – Use cases and business models

Layer #1, which we have named Experience Continuum, brings together all the new use cases and business models, both existing and future. These use cases and business models blur the boundary between the real and the virtual, as we described in our previous article (think in particular of augmented reality and the creator economy). As with the Internet today, use cases fall into three categories: individuals (socializing, entertainment, gaming, etc.), companies (exchanging, collaborating, etc.), and industry (modeling a production line or a distribution network, for example).

Layer #2: Human-Machine Interfaces – The gateway

Layer #2, which we've named Human-Machine Interfaces (HMIs), is, as the name suggests, the layer that allows humans to perceive and interact with Layer #3. HMIs are the gateway to the Metaverse. They combine hardware and software that let users send inputs to the machine and let the machine send outputs back to users, forming a consistent interaction loop. Some of the underlying technologies, such as the keyboard and mouse on the input side or screens on the output side, are very mature. Others, such as brain-machine interfaces, are far less mature. Between these two extremes lies a whole range of HMIs at varying levels of maturity, such as virtual and/or augmented reality headsets, holography, and haptic interfaces. The more these technologies advance, the more immersion in and interaction with the Metaverse will involve all five of our senses.

Layer #3: Extended Reality – The visible face

Layer #3, which we've named Extended Reality (XR), is the immersive representation that augments or replaces reality. It is a spectrum ranging from 100% real to 100% virtual. Extended reality combines the real world and real objects with one or more layers of computer-generated data, information, or representations. In that sense, extended reality is the visible face of the Metaverse. It includes virtual reality (VR) and augmented reality (AR), as well as the whole spectrum of possibilities in between. Its component technologies are also at varying degrees of maturity: virtual reality, for example, is much more mature today than augmented reality. The further these technologies develop, the more they will converge, and the more the Metaverse will become synonymous with continuity between the real and the virtual.

Layer #4: World Engine – The engine

Layer #4, which we have named World Engine, covers all the software used to develop virtual worlds, virtual objects and their processes (digital twins), and virtual people (avatars or digital humans). The World Engine will likely evolve from today's game engines, such as Unity or Unreal, and will therefore share their core architecture. It will be composed of four essential building blocks: i) the graphics engine, responsible for creating and rendering the visual layer of the virtual world; ii) the logic engine, responsible for managing interactions between virtual entities; iii) the physics engine, which enables realistic multi-physics modeling and simulation (e.g. of fluid dynamics or gravity); iv) the presence engine, which enables users to feel present in any location as if they were physically there. Early forms of presence technology can already be found in 4DX cinemas, which combine on-screen visuals with synchronized motion seats and environmental effects such as water, wind, fog, scent, and snow to enhance the action on screen. Here again, the maturity of the underlying technologies varies widely: graphics are already remarkable, whereas presence is only taking its first steps.

Layer #5: Infrastructure – Piping

Layer #5, which we have named Infrastructure, corresponds, as its name suggests, to the physical infrastructure (network, computing power, and storage) that guarantees the real-time collection and processing of data, communications, representations, and reactions. Infrastructure is the piping that ensures three essential properties of the Metaverse: immersion (which increasingly allows users to be "in" the Internet); interaction (which allows users to interact in real time, as if they were in the same room, a key requirement for immersion); and persistence (which allows the Metaverse to keep existing even when the user is not logged in). Infrastructure is probably the least glamorous layer, one that almost no one talks about, and yet it is the most critical, because the infrastructure the Metaverse requires does not exist yet.

Layer #6: Key Enablers – Oiling the wheels

Layer #6, which we've named Key Enablers, brings together a set of technologies, mostly software, that are essential to the proper functioning of the other layers. This sixth and final layer is the oil that lubricates the wheels. It includes IoT (Internet of Things), blockchain, cybersecurity, and artificial intelligence (AI). AI, for example, is needed to generate digital twins automatically or to create lifelike avatars with realistic behavior.

The architectural framework presented above is admittedly a simplified view of reality, if we can talk about reality when we talk about the Metaverse ;-). As such, it is open to debate, and we encourage you to tell us what you think or even to challenge it. This simplified view nevertheless makes it possible to analyze the complexity underlying the Metaverse. In future articles, we will dive deep into each layer to understand its ins and outs, as well as the maturity of each of the technologies that compose it. This approach will allow us to answer the questions: should I be interested in the Metaverse for my business? What are the opportunities today and in the future?

This article, written by Albert Meige and Michael Papadopoulos, was originally published in French on Forbes France.