What are AI-enabled HMIs?

AI-enabled human-machine interfaces (HMIs) are systems in which the technical system of a machine and an operator interact, in a given context and across various channels:

– the machine is itself a hierarchy of interconnected systems that support real-time data acquisition, data processing, artificial intelligence, and modeling;

– the operator has characteristics and performance that vary both over time (intra-individual) and from one person to another (inter-individual), including how cognitive abilities and training are mobilized for a given task;

– the channels include, but are not limited to: vision and imaging, voice and sound, touch and haptics, 2D-3D immersion, and possibly, in the future, direct brain-to-machine interaction. Exchanges are bidirectional, from machine to human and from human to machine. They serve to provide information, to prepare decisions, to act, and to check the consequences of an action so that both the human and the machine can recover as well as possible.

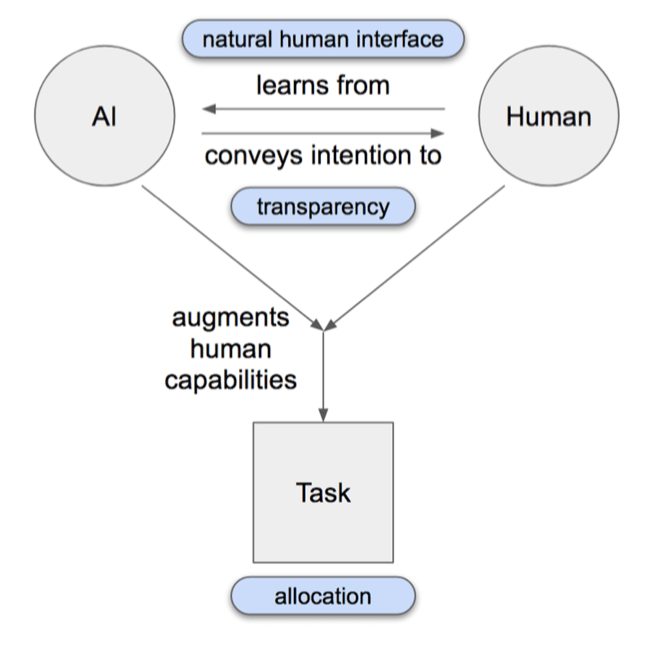

AI-enabled HMI design must take learnability, intentionality and augmentation into account

There are three characteristics of AI which need to be taken into account by HMI designers: learnability, intentionality, and augmentation.

Source: Presans

AI learnability requires a natural back-and-forth from the HMI

Learnability is the ability to make predictions about a large data set by sampling a small number of data points. This characteristic implies an AI with cognitive attributes, such as unbiased observation, persistent awareness of the environment, and identification of patterns shared by different occurrences. Thanks to rapid advances in data mining, visual recognition, and natural language processing, the data the AI learns from can be gathered through the HMI from a co-located human. For example, a team at NVIDIA is developing a way to train AI for industrial robots that aims to closely mimic the way humans learn, including one-shot learning. This implies that we need to prepare natural, human-centered interfaces that let people train AIs effectively and efficiently, without first having to learn a dedicated training interface. In addition, so that humans can check how well and how clearly the AI has learned from them, AI-enabled HMIs should support iterative back-and-forth communication between humans and the AI. If a human operator makes a mistake while training the AI, the interface should tell the operator what the mistake was and how to recover from it as quickly as possible.
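To make the idea of back-and-forth training concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the `TrainingSession` class, its `demonstrate` and `correct` methods, and the "red box" example are all hypothetical and do not describe any real product or the NVIDIA work mentioned above. The point is simply that every demonstration is acknowledged, and that a contradictory demonstration is reported together with a way to recover.

```python
from dataclasses import dataclass, field


@dataclass
class TrainingSession:
    """Hypothetical record of what an operator has taught the AI."""

    examples: dict = field(default_factory=dict)  # observation -> label

    def demonstrate(self, observation: str, label: str) -> str:
        """Store one demonstration, or explain why it was not stored."""
        known = self.examples.get(observation)
        if known is not None and known != label:
            # Say what went wrong and how to recover, without changing state.
            return (f"Conflict: '{observation}' was taught as '{known}', "
                    f"not '{label}'. Use correct() if '{label}' is right.")
        self.examples[observation] = label
        return f"Understood: '{observation}' means '{label}'."

    def correct(self, observation: str, label: str) -> str:
        """Overwrite an earlier demonstration and confirm the change."""
        old = self.examples.get(observation)
        self.examples[observation] = label
        return f"Corrected: '{observation}' changed from '{old}' to '{label}'."
```

In this sketch, a conflicting demonstration leaves the stored knowledge untouched until the operator explicitly confirms the correction, which keeps recovery from a mistake to a single step.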

AI’s intentionality requires transparency

Beyond detecting, understanding, and learning the context of surrounding objects and environments, AIs can independently execute tasks based on the information and knowledge they gather. Depending on the level of automation of decision and action selection, more or less human input is required. Applied to autonomous cars, an artificial agent with mid-level automation would suggest a route change to the human driver in order to reach the destination earlier, and would change the route only if the driver allows it. By contrast, an AI with the highest level of automation would change the route without any alert or notification. In that case, the human driver cannot know why the autonomous car changed the route, even if he or she can guess that the AI found a better one.

Even if the new route performs better, for instance by arriving earlier than expected or by being safer, changing the route without any notification would make people feel insecure and eventually make it hard for them to trust the AI. The need for trust and confidence therefore favors AI and HMI designs that involve human operators even when their input is not strictly required, and that provide explanations and visualizations giving access to the intentions of the AI.
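The route-change example can be sketched in a few lines of Python. This is an illustrative assumption rather than a description of any real vehicle software: the two-level `AutomationLevel` enum, the `handle_better_route` function, and the `ask_driver` and `notify_driver` callbacks are all hypothetical names. The sketch shows one way to keep a fully automated decision transparent, by always giving the driver the reason, even when approval is not requested.

```python
from enum import Enum
from typing import Callable


class AutomationLevel(Enum):
    SUGGEST = "suggest"  # mid-level: propose, then wait for approval
    FULL = "full"        # highest level: act without asking


def handle_better_route(
    level: AutomationLevel,
    current: str,
    candidate: str,
    reason: str,
    ask_driver: Callable[[str], bool],
    notify_driver: Callable[[str], None],
) -> str:
    """Return the route to follow after a better candidate is found."""
    if level is AutomationLevel.SUGGEST:
        # Change the route only if the driver explicitly allows it.
        approved = ask_driver(f"Switch to {candidate}? Reason: {reason}")
        return candidate if approved else current
    # Full automation: no approval needed, but the intention is still exposed.
    notify_driver(f"Switching to {candidate}. Reason: {reason}")
    return candidate
```

The design choice here is that transparency does not depend on the automation level: only the need for approval does.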

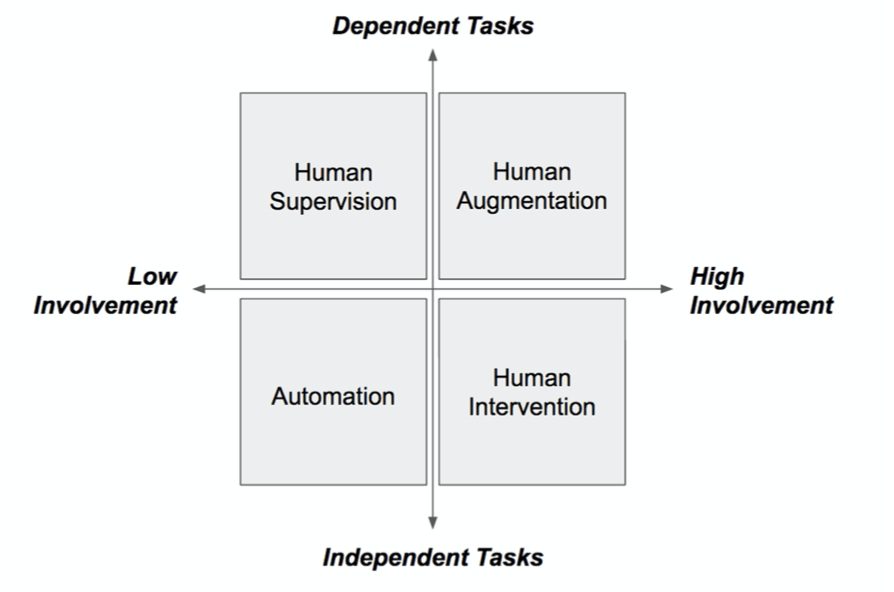

AI’s augmentation requires collaborative task allocation

There are various types of collaboration between humans and AI-enabled machines depending on two features: how much a human worker’s task is dependent on the AI-enabled machine’s task, and how much a human worker is involved with the AI-enabled machine in terms of task execution, decision-making, and error resolution. With these two features, the collaboration between humans and AI-enabled machines can be categorized into human augmentation, human supervision, human intervention, and automation.

Source: Presans

The way tasks, roles, and responsibilities are allocated to humans and AI-enabled machines differs in each collaboration category. In the case of human augmentation, AI-enabled machines take on the role of intelligent and robust coworkers. For example, Hyundai’s wearable exoskeleton supports a human worker’s arm during overhead tasks, and General Motors’ RoboGlove reduces tendon strain for human workers who grip items all day long. An augmenting AI-enabled machine can also support a human worker in packing tasks, for example by sizing the packaging box and dispensing the right amount of tape. In this category, the tasks assigned to the human worker and to the AI-enabled machine are of similar importance and difficulty, or are closely related to each other. Similarly, in human supervision, the tasks assigned to the human worker and to the AI-enabled machine belong to the same flow of execution. For example, a human worker indicates where the packages should be delivered, then an AI-enabled machine loads the packages and delivers them to the designated location. The only difference lies in the roles: the human worker plays the role of the boss, while the AI-enabled machine takes on the role of the subordinate. Human intervention requires additional action from a human worker, who takes over the action of a machine or resolves a situation as quickly as possible when the machine faces unexpected difficulties.
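The package-delivery example can be sketched as follows, combining human supervision (the human designates the destination) with human intervention (the human takes over when the machine runs into trouble). This is a hedged illustration only: `run_delivery`, `machine_step`, and `human_takeover` are hypothetical names, and signalling an unexpected difficulty with a `RuntimeError` is an assumption made for the sake of the example.

```python
from typing import Callable, List, Tuple


def run_delivery(
    packages: List[str],
    destination: str,  # designated by the human supervisor
    machine_step: Callable[[str, str], None],
    human_takeover: Callable[[str, str, str], None],
) -> List[Tuple[str, str]]:
    """Deliver each package and record who completed the delivery."""
    completed = []
    for package in packages:
        try:
            # Normal flow: the machine loads and delivers the package.
            machine_step(package, destination)
            completed.append((package, "machine"))
        except RuntimeError as problem:
            # Intervention: hand the package and the reason to the human.
            human_takeover(package, destination, str(problem))
            completed.append((package, "human"))
    return completed
```

Passing the reason for the failure to the human is what allows the situation to be resolved quickly, and the machine resumes with the next package afterwards.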

Mapping the design features of AI-enabled HMI technologies can help guide breakthrough innovation roadmaps

Presans can help industrial innovators identify the design features that will optimize AI-enabled HMI performance in learnability, intentionality and augmentation.